GStreamer: Difference between revisions

| (47 intermediate revisions by 3 users not shown) | |||

| Line 1: | Line 1: | ||

GStreamer is a toolkit for building audio- and video-processing pipelines. A pipeline might stream video from a file to a network, or add an echo to a recording, or (most interesting to us) capture the output of a Video4Linux device. GStreamer is most often used to power graphical applications such as [https://wiki.gnome.org/Apps/Videos Totem], but can also be used directly from the command-line. This page will explain why GStreamer is better than the alternatives, and how to build an encoder using its command-line interface. |

|||

GStreamer is a multimedia processing library which front-end applications can use to provide a wide variety of functions such as audio and video playback, streaming, non-linear video editing and V4L2 capture support. It is also available for many other platforms, for example Windows. |

|||

It is mainly used as an API working in the background of applications such as Totem. But with the command-line tools gst-launch and entrans it is also possible to create and use GStreamer pipelines directly on the command line. |

|||

'''Before reading this page''', see [[V4L_capturing|V4L capturing]] to set your system up and record analogue sources to disk. This page discusses technical details about GStreamer's mechanics and how to build pipelines. |

|||

==Getting GStreamer== |

|||

GStreamer, the most common GStreamer plugins and the most important tools like gst-launch are available through your distribution's package management. But entrans and some of the plugins used in the examples below are not. You can find their sources bundled by the [http://sourceforge.net/projects/gentrans/files/gst-entrans/ GEntrans project] at SourceForge. Google may help you to find precompiled packages for your distro. |

|||

There are two release series: the old GStreamer 0.10 and the new GStreamer 1.0. They are incompatible but can be installed in parallel. Everything said below can be done with both GStreamer 0.10 and GStreamer 1.0. You simply have to use the appropriate commands, e.g. gst-launch-0.10 vs. gst-launch-1.0 (gst-launch is linked to one of them). Note that the ffmpeg plugins have been renamed to av. For example, ffenc_mp2 in GStreamer 0.10 is called avenc_mp2 in GStreamer 1.0. |

|||

== Introduction to GStreamer == |

|||

==Using GStreamer for V4L TV capture== |

|||

No two use cases for encoding are quite alike. What's your preferred workflow? Is your processor fast enough to encode high quality video in real-time? Do you have enough disk space to store the raw video then process it after the fact? Do you want to play your video in DVD players, or is it enough that it works in your version of [http://www.videolan.org/vlc/index.en_GB.html VLC]? How will you work around your system's obscure quirks? |

|||

===Why prefer GStreamer?=== |

|||

Besides being much more flexible than other solutions, GStreamer handles A/V synchronisation better. Most other tools, especially those based on the ffmpeg library (e.g. mencoder), have difficulties managing A/V synchronisation by design: they process the audio and the video stream independently, frame by frame, as frames come in. Afterwards the streams are muxed relying on their specified framerates. E.g. if you have a 25 fps video stream and a 48,000 Hz audio stream, the tool simply takes 1 video frame, 1,920 audio frames, 1 video frame and so on. This probably leads to sync issues for at least three reasons: |

|||

* If frames get dropped, audio and video shift against each other. For example, if your CPU is not fast enough and sometimes drops a video frame, after 25 dropped frames the video is one second ahead of the audio. (Using mencoder this can be fixed in most situations by using the -harddup option. It causes mencoder to watch for dropped video frames and to copy other frames to fill the gaps before muxing.) |

|||

* The audio and video devices need different amounts of time to start their streams. For example, after you have started capturing, the first audio frame is grabbed 0.01 seconds later while the first video frame is grabbed 0.7 seconds later. This leads to a constant time offset between audio and video. (Using mencoder this can be fixed by using the -delay option. But you have to find the appropriate delay by trial and error, and it won't be very precise. Another problem is that things very often change when you update your software, as for example the buffers and timeouts of the drivers and the audio framework change. So you have to do it again after each update.) |

|||

* If the clocks of your audio source and your video source are not accurate enough (very common on low-cost consumer products), for example your webcam sends 25.02 fps instead of 25 fps and your audio source delivers 47,999 Hz instead of 48,000 Hz, audio and video slowly drift apart over time. The result is that after an hour or so of capturing, audio and video differ by a second or more. But that's not only an issue of inaccurate clocks. Analogue TV is not completely analogue: the frames are discrete. Therefore video capture devices can't rely on their internal clocks but have to synchronise to the incoming signal. That's no problem when capturing live TV, but it is when you capture the signal of a VHS recorder. These recorders can't instantly change the speed of the tape but need to slightly adjust the fps for accurate tracking, especially if the quality of the tape is bad. (This issue can't be addressed using mencoder.) |
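The arithmetic behind the first and third problems can be checked with a short shell sketch. The function names are made up for illustration; the input figures are the ones used in the list above.

```shell
#!/bin/sh
# Audio samples a naive muxer writes for each video frame.
samples_per_frame() {   # usage: samples_per_frame AUDIO_HZ VIDEO_FPS
    awk -v hz="$1" -v fps="$2" 'BEGIN { printf "%d\n", hz / fps }'
}

# Seconds of drift that build up per hour of capture when a source
# really runs at ACTUAL units per second but the muxer assumes NOMINAL.
drift_per_hour() {      # usage: drift_per_hour NOMINAL ACTUAL
    awk -v n="$1" -v a="$2" 'BEGIN { printf "%.2f\n", 3600 * (a - n) / n }'
}

samples_per_frame 48000 25      # 48,000 Hz audio, 25 fps video
drift_per_hour 25 25.02         # webcam delivering 25.02 fps instead of 25
```

A positive result from <code>drift_per_hour</code> means the source delivers more material than the muxer expects, so that stream plays back longer than the other one.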

|||

To be more robust and accurate, GStreamer attaches a timestamp to each incoming frame at the very beginning using the PC's clock. At the end, muxing is done according to these timestamps. |

|||

Many container formats, for example Matroska, support timestamps. Therefore the GStreamer timestamps are written to the file when capturing to those formats. Others like AVI can't handle timestamps. If you write streams with varying framerate (for example due to framedrops) to those files, they are played back with varying speed (but nevertheless correct sync) as the timestamps get lost. To prevent this you have to use the videorate plugin at the end of the pipeline's video part and the audiorate plugin at the end of the pipeline's audio part, which produce streams with constant framerates by copying and dropping frames according to the timestamps. |

|||

To further improve the A/V sync one should use the do-timestamp=true option for each capturing source. It gets the timestamps from the drivers instead of letting GStreamer do the stamping. This is even more accurate as the effects of hardware buffering are also taken into account. Even if the source doesn't support this (e.g. many v4l2 drivers don't support the timestamping feature) there is no harm in doing this, as GStreamer falls back to the normal behaviour in those cases. |

|||

'''Use GStreamer if''' you want the best video quality possible with your hardware, and don't mind spending a weekend browsing the Internet for information. |

|||

=== Entrans versus gst-launch=== |

|||

gst-launch is better documented and part of all distributions. But entrans is a bit smarter for the following reasons: |

|||

* It provides partly automatic composition of GStreamer pipelines |

|||

* It allows cutting of the streams; the simplest application of this feature is to capture for a fixed time. That allows the muxers to properly close the captured files and write correct headers, which is not always the case if you finish capturing with gst-launch by simply typing Ctrl+C. To use this feature one has to insert a ''dam'' element after the first ''queue'' of each part of the pipeline. |

|||

'''Avoid GStreamer if''' you just want something quick-and-dirty, or can't stand programs with bad documentation and unhelpful error messages. |

|||

===Common capturing issues and their solutions=== |

|||

* Most video capturing devices send an EndOfStream signal if the input is too bad or there is a break of snow in the signal. This aborts the capturing process. To prevent the device from sending an EOS in such cases use ''num-buffers=-1''. |

|||

====Jerking==== |

|||

* If your hardware is not fast enough you will probably get framedrops. An indication is high CPU load. If there are only a few framedrops you can try to address the issue by enlarging the buffers. (If that doesn't help you have to change the codec or its parameters and/or lower the resolution.) You have to enlarge the queues as well as the buffers of your sources, e.g. ''queue max-size-buffers=0 max-size-time=0 max-size-bytes=0'', ''v4l2src queue-size=16'', ''pulsesrc buffer-time=2000000''. Even if it is not necessary, increasing the buffer sizes doesn't do any harm. Big buffers only increase the pipeline's latency, which doesn't matter for capturing purposes. |

|||

* Some muxers need big chunks of data at once. Therefore situations happen in which the muxer waits, for example, for more audio data and doesn't process any video data. If this takes longer than filling up all the video buffers of the pipeline, video frames get dropped. To prevent the resulting jerking one should enlarge all the buffers. ''queue max-size-buffers=0 max-size-time=0 max-size-bytes=0'' should disable any size restrictions of the queue. But in fact there seems to be a hard-coded restriction in some cases. If needed you can work around this issue by adding additional ''queue'' elements to the pipeline. You can test whether too-small buffers are the reason for your jerking by changing the pipeline to write the audio and the video stream into different files instead of muxing them together. If these files don't judder, too-small buffers are your problem. |

|||

* Many v4l2-drivers don't support timestamping. So even if ''do-timestamp=true'' is given, GStreamer has to do the timestamping when it gets the frames from the driver. Most drivers tend to fill up their internal buffers and pass many frames as a cluster. This makes GStreamer give all the frames of such a cluster (nearly) the same timestamp. As those inaccuracies are typically very small this doesn't disturb A/V sync, but it leads to jerking as the frames are played back in clusters if videorate isn't used. If videorate is used the jerking is even worse, as videorate, relying on the inaccurate timestamps, drops all but one frame of each cluster and copies this frame to fill the gaps. If videorate is used with the ''silent=false'' option it reports many framedrops and framecopies even if the CPU load is low. To solve this problem use the ''stamp'' plugin between ''v4l2src'' and ''queue'', for example ''v4l2src do-timestamp=true ! stamp sync-margin=2 sync-interval=5 ! queue''. The stamp plugin inspects the buffers and uses a smart algorithm to correct the timestamps if the driver doesn't support timestamping. Using the sync options, ''stamp'' can additionally drop or copy frames to get a close-to-constant framerate. In most cases this doesn't completely replace videorate. It's safe to use videorate in the same pipeline. |

|||

=== Why is GStreamer better at encoding? === |

|||

====Capturing of disturbed video signals==== |

|||

* Most video capturing devices send EndOfStream signals if the quality of the input signal is too bad or if there is a period of snow. This aborts the capturing process. To prevent the device from sending EOS set ''num-buffers=-1'' on the ''v4l2src'' element. |

|||

* The ''stamp'' plugin gets confused by periods of snow and produces faulty timestamps and framedrops. This effect itself doesn't matter, as stamp recovers normal behaviour when the break is over. But chances are good that the buffers are full of old, weirdly stamped frames. ''stamp'' then drops only one of them each sync-interval, with the result that it can take quite a long time (minutes) until everything works fine again. To solve this problem set ''leaky=2'' on each ''queue'' element to allow dropping of old frames which aren't needed any longer. |

|||

* If you use variable bitrate for encoding, the bitrate increases very much during periods of bad signal quality or snow. Afterwards the codec uses a very low bitrate to reach the desired average bitrate, resulting in poor quality. To prevent this and stay within the limits allowed for your target format, don't forget to specify a maximum and a minimum for the variable bitrate. |

|||

GStreamer isn't as easy to use as <code>mplayer</code>, and doesn't have as advanced editing functionality as <code>ffmpeg</code>. But it has superior support for synchronising audio and video in disturbed sources such as VHS tapes. If you specify your input is (say) 25 frames per second video and 48,000Hz audio, most tools will synchronise audio and video simply by writing 1 video frame, 1,920 audio frames, 1 video frame and so on. There are at least three ways this can cause errors: |

|||

===Sample commands=== |

|||

* '''initialisation timing''': audio and video desynchronised by a certain amount from the first frame, usually caused by audio and video devices taking different amounts of time to initialise. For example, the first audio frame might be delivered to GStreamer 0.01 seconds after it was requested, but the first video frame might not be delivered until 0.7 seconds after it was requested, causing all video to be almost 0.7 seconds behind the audio |

|||

====Record to ogg theora==== |

|||

** <code>mencoder</code>'s ''-delay'' option solves this by delaying the audio |

|||

* '''failure to encode''': frames that desynchronise gradually over time, usually caused by audio and video shifting relative to each other when frames are dropped. For example if your CPU is not fast enough and sometimes drops a video frame, after 25 dropped frames the video will be one second ahead of the audio |

|||

** <code>mencoder</code>'s ''-harddup'' option solves this by duplicating other frames to fill in the gaps |

|||

* '''source frame rate''': frames that aren't delivered at the advertised rate, usually caused by inaccurate clocks in the source hardware. For example, a low-cost webcam might deliver 25.01 video frames per second and 47,999Hz, causing your audio and video to drift apart by a second or so per hour |

|||

** video tapes are especially problematic here - if you've ever seen a VCR struggle during those few seconds between two recordings on a tape, you've seen them adjusting the tape speed to accurately track the source. Frame counts can vary enough during these periods to instantly desynchronise audio and video |

|||

** <code>mencoder</code> has no solution for this problem |

|||

GStreamer solves these problems by attaching a timestamp to each incoming frame based on the time GStreamer receives the frame. It can then mux the sources back together accurately using these timestamps, either by using a format that supports variable framerates or by duplicating frames to fill in the blanks: |

|||

gst-launch-0.10 oggmux name=mux ! filesink location=test0.ogg v4l2src device=/dev/video2 ! \ |

|||

# If you choose a container format that supports timestamps (e.g. Matroska), timestamps are automatically written to the file and used to vary the playback speed |

|||

video/x-raw-yuv,width=640,height=480,framerate=\(fraction\)30000/1001 ! ffmpegcolorspace ! \ |

|||

# If you choose a container format that does not support timestamps (e.g. AVI), you must duplicate other frames to fill in the gaps by adding the <code>videorate</code> and <code>audiorate</code> plugins to the end of the relevant pipelines |

|||

theoraenc ! queue ! mux. alsasrc device=hw:2,0 ! audio/x-raw-int,channels=2,rate=32000,depth=16 ! \ |

|||

audioconvert ! vorbisenc ! mux. |

|||

To get accurate timestamps, specify the <code>do-timestamp=true</code> option for all your sources. This will ensure accurate timestamps are retrieved from the driver where possible. Sadly, many v4l2 drivers don't support timestamps - GStreamer will add timestamps for these drivers to stop audio and video drifting apart, but you will need to fix the initialisation timing yourself (discussed below). |

|||

The files will play in mplayer, using the codec Theora. Note the required workaround to get sound on a saa7134 card, which is set at 32000Hz (cf. [http://pecisk.blogspot.com/2006/04/alsa-worries-countinues.html bug]). However, I was still unable to get sound output, though mplayer claimed there was sound -- the video is good quality: |

|||

Once you've encoded your video with GStreamer, you might want to ''transcode'' it with <code>ffmpeg</code>'s superior editing features. |

|||

VIDEO: [theo] 640x480 24bpp 29.970 fps 0.0 kbps ( 0.0 kbyte/s) |

|||

Selected video codec: [theora] vfm: theora (Theora (free, reworked VP3)) |

|||

AUDIO: 32000 Hz, 2 ch, s16le, 112.0 kbit/10.94% (ratio: 14000->128000) |

|||

Selected audio codec: [ffvorbis] afm: ffmpeg (FFmpeg Vorbis decoder) |

|||

=== |

=== Getting GStreamer === |

||

GStreamer, its most common plugins and tools are available through your distribution's package manager. Most Linux distributions include both the legacy ''0.10'' and modern ''1.0'' release series - each has bugs that stop them from working on some hardware, and this page focuses mostly on the legacy ''0.10'' series because it happened to work with my TV card. Converting the commands below to work with ''1.0'' is mostly just search-and-replace work (e.g. changing instances of <code>ff</code> to <code>av</code> because of the switch from <code>ffmpeg</code> to <code>libavcodec</code>). See [http://gstreamer.freedesktop.org/data/doc/gstreamer/head/manual/html/chapter-porting-1.0.html the porting guide] for more. |

|||

Or mpeg4 with an avi container (Debian has disabled ffmpeg encoders, so install Marillat's package or use example above): |

|||

Other plugins are also available, such as <code>[http://sourceforge.net/projects/gentrans/files/gst-entrans/ entrans]</code> (used in some examples below). Google might help you find packages for your distribution, otherwise you'll need to download and compile them yourself. |

|||

gst-launch-0.10 avimux name=mux ! filesink location=test0.avi v4l2src device=/dev/video2 ! \ |

|||

video/x-raw-yuv,width=640,height=480,framerate=\(fraction\)30000/1001 ! ffmpegcolorspace ! \ |

|||

ffenc_mpeg4 ! queue ! mux. alsasrc device=hw:2,0 ! audio/x-raw-int,channels=2,rate=32000,depth=16 ! \ |

|||

audioconvert ! lame ! mux. |

|||

=== Using GStreamer with gst-launch === |

|||

I get a file out of this that plays in mplayer, with blocky video and no sound. Avidemux cannot open the file. |

|||

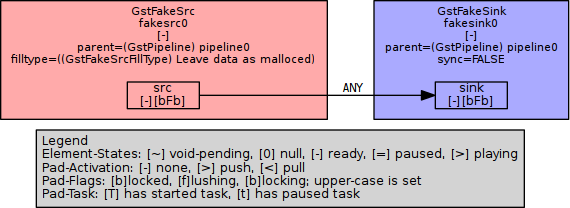

<code>gst-launch</code> is the standard command-line interface to GStreamer. Here's the simplest pipeline you can build: |

|||

====Record to DVD-compliant MPEG2==== |

|||

gst-launch-0.10 fakesrc ! fakesink |

|||

entrans -s cut-time -c 0-180 -v -x '.*caps' --dam -- --raw \ |

|||

v4l2src queue-size=16 do-timestamp=true device=/dev/video0 norm=PAL-BG num-buffers=-1 ! stamp silent=false progress=0 sync-margin=2 sync-interval=5 ! \ |

|||

queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! dam ! \ |

|||

cogcolorspace ! videorate silent=false ! \ |

|||

'video/x-raw-yuv,width=720,height=576,framerate=25/1,interlaced=true,aspect-ratio=4/3' ! \ |

|||

queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! \ |

|||

ffenc_mpeg2video rc-buffer-size=1500000 rc-max-rate=7000000 rc-min-rate=3500000 bitrate=4000000 max-key-interval=15 pass=pass1 ! \ |

|||

queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! mux. \ |

|||

pulsesrc buffer-time=2000000 do-timestamp=true ! \ |

|||

queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! dam ! \ |

|||

audioconvert ! audiorate silent=false ! \ |

|||

audio/x-raw-int,rate=48000,channels=2,depth=16 ! \ |

|||

queue silent=false max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! \ |

|||

ffenc_mp2 bitrate=192000 ! \ |

|||

queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! mux. \ |

|||

ffmux_mpeg name=mux ! filesink location=my_recording.mpg |

|||

This connects a single (fake) source to a single (fake) sink using the 0.10 series of GStreamer: |

|||

This captures 3 minutes (180 seconds, see first line of the command) to ''my_recording.mpg'' and even works for bad input signals. |

|||

* I wasn't able to figure out how to produce an MPEG file with AC3 sound, as neither ffmux_mpeg nor mpegpsmux support AC3 streams at the moment. mplex does, but I wasn't able to get it working, as one needs very big buffers to prevent the pipeline from stalling and at least my GStreamer build didn't allow for such big buffers. |

|||

* The limited buffer size on my system is again the reason why I had to add a third queue element to the middle of the audio as well as of the video part of the pipeline to prevent jerking. |

|||

* In many HOWTOs you find ffmpegcolorspace instead of cogcolorspace. You can use either, but cogcolorspace is much faster. |

|||

* It seems to be important that the ''video/x-raw-yuv,width=720,height=576,framerate=25/1,interlaced=true,aspect-ratio=4/3'' statement comes after ''videorate'', as videorate otherwise seems to drop the aspect-ratio metadata, resulting in files with aspect-ratio 1 in their headers. Those files are probably played back warped, and programs like dvdauthor complain. |

|||

[[File:GStreamer-simple-pipeline.png|center|Very simple pipeline]] |

|||

====Record to raw video==== |

|||

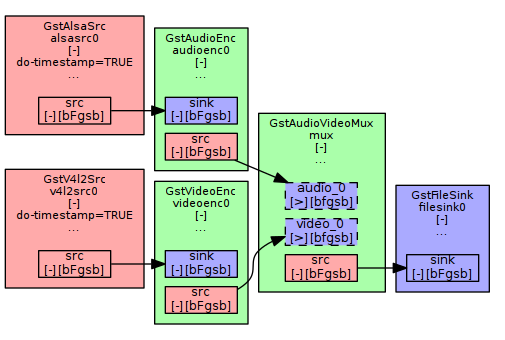

GStreamer can build all kinds of pipelines, but you probably want to build one that looks something like this: |

|||

If you don't care for sound, this simple version works for uncompressed video: |

|||

[[File:Example-pipeline.png|center|Idealised pipeline example]] |

|||

gst-launch-0.10 v4l2src device=/dev/video5 ! video/x-raw-yuv,width=640,height=480 ! avimux ! \ |

|||

filesink location=test0.avi |

|||

To get a list of elements that can go in a GStreamer pipeline, do: |

|||

tcprobe says this video-only file uses the I420 codec and gives the framerate as correct NTSC: |

|||

gst-inspect-0.10 | less |

|||

$ tcprobe -i test0.avi |

|||

Pass an element name to <code>gst-inspect-0.10</code> for detailed information. For example: |

|||

gst-inspect-0.10 fakesrc |

|||

gst-inspect-0.10 fakesink |

|||

If you install [http://www.graphviz.org Graphviz], you can build graphs like the above yourself: |

|||

mkdir gst-visualisations |

|||

GST_DEBUG_DUMP_DOT_DIR=gst-visualisations gst-launch-0.10 fakesrc ! fakesink |

|||

dot -Tpng gst-visualisations/*-gst-launch.PLAYING_READY.dot > my-pipeline.png |

|||

To get graphs of the example pipelines below, prepend <code>GST_DEBUG_DUMP_DOT_DIR=gst-visualisations </code> to the <code>gst-launch</code> command. Run this command to generate a PNG version of GStreamer's most interesting stage: |

|||

dot -Tpng gst-visualisations/*-gst-launch.PLAYING_READY.dot > my-pipeline.png |

|||

Remember to empty the <code>gst-visualisations</code> directory between runs. |
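The clean-up and rendering steps can be wrapped in two small shell helpers. This is only a sketch: the function names and the <code>DOT_DIR</code> variable are made up for illustration, and only <code>GST_DEBUG_DUMP_DOT_DIR</code> and <code>dot -Tpng</code> come from the text above.

```shell
#!/bin/sh
# Directory GStreamer dumps its .dot graphs into (hypothetical variable name).
DOT_DIR="${DOT_DIR:-gst-visualisations}"

# Create the dump directory if needed and delete graphs left by earlier runs.
reset_dot_dir() {
    mkdir -p "$DOT_DIR" && rm -f "$DOT_DIR"/*.dot
}

# Convert every dumped graph to a PNG next to it (needs Graphviz).
render_dot_dir() {
    for graph in "$DOT_DIR"/*.dot; do
        [ -e "$graph" ] || continue     # nothing was dumped
        dot -Tpng "$graph" > "${graph%.dot}.png"
    done
}

reset_dot_dir
```

A typical run would call <code>reset_dot_dir</code>, then <code>GST_DEBUG_DUMP_DOT_DIR="$DOT_DIR" gst-launch-0.10 fakesrc ! fakesink</code>, then <code>render_dot_dir</code>.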

|||

=== Using GStreamer with entrans === |

|||

<code>gst-launch</code> is the main command-line interface to GStreamer, available by default. But <code>entrans</code> is a bit smarter: |

|||

* it provides partly-automated composition of GStreamer pipelines |

|||

* it allows you to cut streams, for example to capture for a predefined duration. That ensures headers are written correctly, which is not always the case if you close <code>gst-launch</code> by pressing Ctrl+C. To use this feature one has to insert a ''dam'' element after the first ''queue'' of each part of the pipeline |

|||

== Common capturing issues and their solutions == |

|||

=== Reducing Jerkiness === |

|||

If motion that should appear smooth instead stops and starts, try the following: |

|||

'''Check for muxer issues'''. Some muxers need big chunks of data, which can cause one stream to pause while it waits for the other to fill up. Change your pipeline to pipe your audio and video directly to their own <code>filesink</code>s - if the separate files don't judder, the muxer is the problem. |

|||

* If the muxer is at fault, add ''! queue max-size-buffers=0 max-size-time=0 max-size-bytes=0'' immediately before each stream goes to the muxer |

|||

** queues have hard-coded maximum sizes - you can chain queues together if you need more buffering than one queue can hold |

|||

'''Check your CPU load'''. When GStreamer uses 100% CPU, it may need to drop frames to keep up. |

|||

* If frames are dropped occasionally when CPU usage spikes to 100%, add a (larger) buffer to help smooth things out. |

|||

** this can be a source's internal buffer (e.g. ''v4l2src queue-size=16'' or ''alsasrc buffer-time=2000000''), or it can be an extra buffering step in your pipeline (''! queue max-size-buffers=0 max-size-time=0 max-size-bytes=0'') |

|||

* If frames are dropped when other processes have high CPU load, consider using [https://en.wikipedia.org/wiki/Nice_(Unix) nice] to make sure encoding gets CPU priority |

|||

* If frames are dropped regularly, use a different codec, change the parameters, lower the resolution, or otherwise choose a less resource-intensive solution |

|||

As a general rule, you should try increasing buffers first - if it doesn't work, it will just increase the pipeline's latency a bit. Be careful with <code>nice</code>, as it can slow down or even halt your computer. |
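To get a feel for how much memory a larger audio buffer costs, the size can be estimated from the buffer time. The sketch below assumes ''buffer-time'' is given in microseconds and that the audio is raw PCM; the function name is made up for illustration.

```shell
#!/bin/sh
# Bytes of raw PCM audio that fit into a source buffer of the given length.
audio_buffer_bytes() {  # usage: audio_buffer_bytes BUFFER_TIME_US RATE_HZ CHANNELS BITS
    awk -v us="$1" -v rate="$2" -v ch="$3" -v bits="$4" \
        'BEGIN { printf "%d\n", us / 1000000 * rate * ch * bits / 8 }'
}

# buffer-time=2000000 with 48,000 Hz, stereo, 16-bit audio
audio_buffer_bytes 2000000 48000 2 16
```

Two seconds of audio in that format comes to a few hundred kilobytes, which is why generous audio buffers are cheap compared with buffering raw video.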

|||

'''Check for incorrect timestamps'''. If your video driver works by filling up an internal buffer then passing a cluster of frames without timestamps, GStreamer will think these should all have (nearly) the same timestamp. Make sure you have a <code>videorate</code> element in your pipeline, then add ''silent=false'' to it. If it reports many framedrops and framecopies even when the CPU load is low, the driver is probably at fault. |

|||

* <code>videorate</code> on its own will actually make this problem worse by picking one frame and replacing all the others with it. Instead install <code>entrans</code> and add its ''stamp'' element between ''v4l2src'' and ''queue'' (e.g. ''v4l2src do-timestamp=true ! stamp sync-margin=2 sync-interval=5 ! videorate ! queue'') |

|||

** ''stamp'' intelligently guesses timestamps if drivers don't support timestamping. Its ''sync-'' options drop or copy frames to get a nearly-constant framerate. Using <code>videorate</code> as well does no harm and can solve some remaining problems |

|||

=== Avoiding pitfalls with video noise === |

|||

If your video contains periods of [https://en.wikipedia.org/wiki/Noise_(video) video noise] (snow), you may need to deal with some extra issues: |

|||

* Most devices send an EndOfStream signal if the input signal quality drops too low, causing GStreamer to finish capturing. To prevent the device from sending EOS, set ''num-buffers=-1'' on the ''v4l2src'' element. |

|||

* The ''stamp'' plugin gets confused by periods of snow, causing it to generate faulty timestamps and framedropping. ''stamp'' will recover normal behaviour when the break is over, but will probably leave the buffer full of weirdly-stamped frames. ''stamp'' only drops one weirdly-stamped frame each sync-interval, so it can take several minutes until everything works fine again. To solve this problem, set ''leaky=2'' on each ''queue'' element to allow dropping old frames |

|||

* Periods of noise (snow, bad signal etc.) are hard to encode. Variable bitrate encoders will often drive up the bitrate during the noise then down afterwards to maintain the average bitrate. To minimise the issues, specify a minimum and maximum bitrate in your encoder |

|||

* Snow at the start of a recording is just plain ugly. To get black input instead from a VCR, use the remote control to change the input source before you start recording |
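The first three settings can be combined in one video branch. The sketch below only prints the fragment so you can paste it into a larger pipeline; it is not a complete pipeline (colourspace conversion, caps and muxing are left out). Element and property names are copied from the DVD example elsewhere on this page, the bitrate values are that example's and not a recommendation, and the function name is made up.

```shell
#!/bin/sh
# Print (not run) a noise-tolerant video branch for GStreamer 0.10.
noise_tolerant_video_branch() {   # usage: noise_tolerant_video_branch DEVICE
    cat <<EOF
v4l2src device=$1 do-timestamp=true num-buffers=-1 \\
  ! stamp sync-margin=2 sync-interval=5 \\
  ! queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \\
  ! videorate \\
  ! ffenc_mpeg2video bitrate=4000000 rc-min-rate=3500000 rc-max-rate=7000000
EOF
}

noise_tolerant_video_branch /dev/video0
```

Here ''num-buffers=-1'' stops the EOS, ''leaky=2'' lets the queue discard stale frames, and the ''rc-min-rate''/''rc-max-rate'' pair bounds the variable bitrate.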

|||

=== Working around bugs in GStreamer === |

|||

Sadly, you will probably run into several GStreamer bugs while creating a pipeline. Worse, the debugging information usually isn't enough to diagnose problems. Debugging usually goes something like this: |

|||

# see that <code>gst-launch</code> is failing to initialise, and gives no useful error message |

|||

# try similar pipelines - add, change and remove large parts until you find something that works |

|||

#* if possible, start with the working version and make one change at a time until you identify a single change that triggers the problem |

|||

# run the simplest failing pipeline with <code>--gst-debug=3</code> (or higher if necessary) |

|||

# find an error message that looks relevant, search the Internet for information about it |

|||

# try more variations based on what you learnt, until you eventually find something that works |
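Debug output at level 3 can run to many lines, so it helps to filter it down to the lines worth searching for. A minimal sketch, assuming colour codes are switched off so that the level appears as a plain word in each log line; the function name is made up.

```shell
#!/bin/sh
# Keep only the ERROR and WARN lines of a GStreamer debug log read from stdin.
gst_problems() {
    grep -E '[[:space:]](ERROR|WARN)[[:space:]]'
}
```

GStreamer writes its debug log to standard error, so a typical call would be <code>gst-launch-1.0 --gst-debug=3 --gst-debug-no-color (your pipeline) 2>&1 | gst_problems</code>.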

|||

For example, here's the basic outline of how I diagnosed the need to use ''format=UYVY'' (minus several wrong turns and silly mistakes): |

|||

<ol> |

|||

<li>Made a simple pipeline to encode video in GStreamer 1.0: |

|||

<pre>gst-launch-1.0 v4l2src device=$VIDEO_DEVICE ! video/x-raw,interlaced=true,width=720,height=576 ! autovideosink</pre> |

|||

* this opened a window, but never played any video frames into it |

|||

<li> Tried the same pipeline in GStreamer 0.10: |

|||

<pre>gst-launch-0.10 v4l2src device=$VIDEO_DEVICE ! video/x-raw-yuv,interlaced=true,width=720,height=576 ! autovideosink</pre> |

|||

* this worked, proving GStreamer is capable of processing video |

|||

<li> Reran the failing command with <code>--gst-debug=3</code>: |

|||

<pre>gst-launch-1.0 --gst-debug=3 v4l2src device=$VIDEO_DEVICE ! video/x-raw,interlaced=true,width=720,height=576 ! autovideosink</pre> |

|||

* this produced some error messages that didn't mean anything to me |

|||

<li> Searched on Google for <code>"gst_video_frame_map_id: failed to map video frame plane 1"</code> (with quotes)<br> |

|||

* this returned [http://lists.freedesktop.org/archives/gstreamer-devel/2015-February/051678.html a discussion of the problem] |

|||

<li> Read through that thread<br> |

|||

* Most of it went over my head, but I understood it was a GStreamer bug related to the YUV format |

|||

<li> Read the <code>v4l2src</code> description for supported formats: |

|||

<pre>gst-inspect-1.0 v4l2src | less</pre> |

|||

<li> Tried every possible format, made a note of which ones worked: |

|||

<pre>for FORMAT in RGB15 RGB16 BGR RGB BGRx BGRA xRGB ARGB GRAY8 YVU9 YV12 YUY2 UYVY Y42B Y41B NV12_64Z32 YUV9 I420 YVYU NV21 NV12 |

|||

do |

|||

echo FORMAT: $FORMAT |

|||

gst-launch-1.0 v4l2src device=$VIDEO_DEVICE ! video/x-raw,format=$FORMAT,interlaced=true,width=720,height=576 ! autovideosink |

|||

done</pre> |

|||

* ''YUY2'' and ''UYVY'' worked, the others failed to initialise or opened a window but failed to play video |

|||

<li> Searched on Google for information about ''YUY2'' and ''UYVY'', as well as ''YUV9'' and ''YUV12'', which seemed to be related |

|||

* eventually found [http://forum.videohelp.com/threads/10031-Which-format-to-use-24-bit-RGB-YUV2-YUV9-YUV12?s=92a0bbf59497ea5c21528e614e7ad1a6&p=38242&viewfull=1#post38242 a post saying they're all compatible] |

|||

<li> compared the output of the formats that worked to the GStreamer 0.10 baseline: |

|||

<pre>gst-launch-1.0 v4l2src device=$VIDEO_DEVICE ! video/x-raw, format=YUY2, interlaced=true, width=720, height=576 ! videoconvert ! x264enc ! matroskamux ! filesink location=1-yuy2.mkv |

|||

gst-launch-1.0 v4l2src device=$VIDEO_DEVICE ! video/x-raw, format=UYVY, interlaced=true, width=720, height=576 ! videoconvert ! x264enc ! matroskamux ! filesink location=1-uyvy.mkv |

|||

gst-launch-0.10 v4l2src device=$VIDEO_DEVICE ! video/x-raw-yuv, interlaced=true, width=720, height=576 ! x264enc ! matroskamux ! filesink location=10.mkv</pre> |

|||

* killed each after 5 seconds then played them side-by-side - they looked similar to my eye, but the ''1.0'' files played at ''720x629'' resolution |

|||

<li> Re-read the <code>v4l2src</code> description: |

|||

<pre>gst-inspect-1.0 v4l2src | less</pre> |

|||

* found the <code>pixel-aspect-ratio</code> setting |

|||

<li> arbitrarily chose <code>format=UYVY</code> and tried again with a defined pixel aspect ratio: |

|||

<pre>gst-launch-1.0 v4l2src device=$VIDEO_DEVICE pixel-aspect-ratio=1 \ |

|||

! video/x-raw, format=UYVY, interlaced=true, width=720, height=576 \ |

|||

! videoconvert \ |

|||

! x264enc \ |

|||

! matroskamux \ |

|||

! filesink location=1-uyvy.mkv</pre> |

|||

* it worked! |

|||

</ol> |

|||
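The trial-and-error step above can be scripted so that each attempt stops on its own. This is a minimal sketch rather than the exact method used above: the function name <code>probe_formats</code> and the <code>GST_LAUNCH</code> override are assumptions made for illustration. It swaps <code>autovideosink</code> for <code>fakesink</code>, so it only detects formats that fail to initialise, not ones that open a window but show no video.

```shell
# Try each candidate format for a short time and report whether the pipeline ran.
# GST_LAUNCH is a hypothetical override so the loop can be dry-run without hardware.
probe_formats() {
    device="$1"
    shift
    for format in "$@"
    do
        # num-buffers makes the source stop by itself; timeout is a safety net
        if timeout 10 "${GST_LAUNCH:-gst-launch-1.0}" -q \
            v4l2src device="$device" num-buffers=50 \
            ! "video/x-raw,format=$format" \
            ! fakesink >/dev/null 2>&1
        then echo "$format: ok"
        else echo "$format: failed"
        fi
    done
}

# Example call (needs a real capture device):
# probe_formats "$VIDEO_DEVICE" YUY2 UYVY I420
```

Formats reported as ''ok'' should still be checked by eye with <code>autovideosink</code> before recording anything important.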

== Sample pipelines == |

|||

At some point, you will probably need to build your own GStreamer pipeline. This section contains examples to give you the basic idea. |

|||

Note: for consistency and ease of copy/pasting, all filenames in this section are of the form <code>test-$( date --iso-8601=seconds )</code> - your shell should automatically convert this to e.g. <code>test-2010-11-12T13:14:15+1600.avi</code> |

|||

=== Record raw video only === |

|||

A simple pipeline that initialises one video ''source'', sets the video format, ''muxes'' it into a file format, then saves it to a file: |

|||

gst-launch-0.10 \ |

|||

v4l2src do-timestamp=true device=$VIDEO_DEVICE \ |

|||

! video/x-raw-yuv,width=640,height=480 \ |

|||

! avimux \

|||

! filesink location=test-$( date --iso-8601=seconds ).avi |

|||

<code>tcprobe</code> says this video-only file uses the I420 codec and gives the framerate as correct NTSC: |

|||

$ tcprobe -i test-*.avi |

|||

[tcprobe] RIFF data, AVI video
[avilib] V: 29.970 fps, codec=I420, frames=315, width=640, height=480
[tcprobe] summary for test-(date).avi, (*) = not default, 0 = not detected
import frame size: -g 640x480 [720x576] (*)
frame rate: -f 29.970 [25.000] frc=4 (*)

The files will play in mplayer, using the codec [raw] RAW Uncompressed Video.

||
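Raw recordings grow quickly, so it can help to estimate the data rate before starting. The sketch below assumes the I420 layout reported by <code>tcprobe</code>, which stores 12 bits (1.5 bytes) per pixel. The function name is made up for this example.

```shell
# Bytes per second of raw I420 video: width * height * 1.5 bytes per frame,
# multiplied by the framerate given as a fraction (e.g. 30000/1001 for NTSC).
raw_i420_bytes_per_second() {
    width="$1" height="$2" fps_num="$3" fps_den="$4"
    echo $(( width * height * 3 / 2 * fps_num / fps_den ))
}

# The 640x480 NTSC capture above:
raw_i420_bytes_per_second 640 480 30000 1001   # prints 13810189
```

That is about 13.8MB per second, or roughly 50GB per hour of video before any audio is added.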


=== Record to ogg theora === |

||

Here is a more complex example that initialises two sources - one video and one audio:

gst-launch-0.10 \
v4l2src do-timestamp=true device=$VIDEO_DEVICE \
! video/x-raw-yuv,width=640,height=480,framerate=\(fraction\)30000/1001 \

|||

! ffmpegcolorspace \ |

|||

! theoraenc \ |

|||

! queue \ |

|||

! mux. \ |

|||

alsasrc do-timestamp=true device=$AUDIO_DEVICE \ |

|||

! audio/x-raw-int,channels=2,rate=32000,depth=16 \ |

|||

! audioconvert \ |

|||

! vorbisenc \ |

|||

! mux. \ |

|||

oggmux name=mux \ |

|||

! filesink location=test-$( date --iso-8601=seconds ).ogg |

|||

Each source is encoded and piped into a <i>muxer</i> that builds an ogg-formatted data stream. The stream is then saved to <code>test-$( date --iso-8601=seconds ).ogg</code>. Note the <code>rate=32000</code> workaround, which is required to get sound on a saa7134 card that is set to 32000Hz (cf. [http://pecisk.blogspot.com/2006/04/alsa-worries-countinues.html bug]). Even so, I was unable to get sound output, although mplayer claimed there was sound. The video is good quality:

VIDEO: [theo] 640x480 24bpp 29.970 fps 0.0 kbps ( 0.0 kbyte/s)
Selected video codec: [theora] vfm: theora (Theora (free, reworked VP3))
AUDIO: 32000 Hz, 2 ch, s16le, 112.0 kbit/10.94% (ratio: 14000->128000)
Selected audio codec: [ffvorbis] afm: ffmpeg (FFmpeg Vorbis decoder)

=== Record to mpeg4 ===

This is similar to the above, but generates an AVI file with streams encoded using AVI-compatible encoders:

|||

gst-launch-0.10 \ |

|||

v4l2src do-timestamp=true device=$VIDEO_DEVICE \ |

|||

! video/x-raw-yuv,width=640,height=480,framerate=\(fraction\)30000/1001 \ |

|||

! ffmpegcolorspace \ |

|||

! ffenc_mpeg4 \ |

|||

! queue \ |

|||

! mux. \ |

|||

alsasrc do-timestamp=true device=$AUDIO_DEVICE \ |

|||

! audio/x-raw-int,channels=2,rate=32000,depth=16 \ |

|||

! audioconvert \ |

|||

! lame \ |

|||

! mux. \ |

|||

avimux name=mux \ |

|||

! filesink location=test-$( date --iso-8601=seconds ).avi |

|||

I get a file out of this that plays in mplayer, with blocky video and no sound. Avidemux cannot open the file. |

|||

=== High quality video === |

|||

gst-launch-0.10 -q -e \ |

|||

v4l2src device=$VIDEO_DEVICE do-timestamp=true norm=PAL-I \ |

|||

! queue max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! video/x-raw-yuv,interlaced=true,width=720,height=576 \ |

|||

! x264enc interlaced=true pass=quant option-string=quantizer=0 speed-preset=ultrafast byte-stream=true \ |

|||

! progressreport update-freq=1 \ |

|||

! mux. \ |

|||

alsasrc device=$AUDIO_DEVICE do-timestamp=true \ |

|||

! queue max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! audio/x-raw-int,depth=16,rate=32000 \ |

|||

! flacenc \ |

|||

! mux. \ |

|||

matroskamux name=mux min-index-interval=1000000000 \ |

|||

! filesink location=test-$( date --iso-8601=seconds ).mkv |

|||

This creates a file with 720x576 video and 32kHz audio, using FLAC audio and x264 video in lossless mode, muxed into a Matroska container. It doesn't need much CPU and should be a faithful representation of the source, but the files can be 30GB per hour and the format is not widely supported. Consider transcoding to a smaller/more compatible format after encoding.

|||

Some things to note about this pipeline: |

|||

* <code>quantizer=0</code> sets the video codec to lossless mode. Anything up to <code>quantizer=18</code> should not lose information visible to the human eye, and will produce much smaller files |

|||

* <code>speed-preset=ultrafast</code> sets the video codec to prioritise speed at the cost of file size. <code>speed-preset=veryslow</code> is the opposite, and there are various presets in between |

|||

* The Matroska format supports variable framerates, which can be useful for VHS tapes that might not deliver the same number of frames each second |

|||

* <code>min-index-interval=1000000000</code> improves seek times by telling the Matroska muxer to create one ''cue data'' entry per second of playback. Cue data is a few kilobytes per hour, added to the end of the file when encoding completes. If you try to watch your Matroska video while it's being recorded, it will take a long time to skip forward/back because the cue data hasn't been written yet |

|||
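The options discussed in the notes above can be generated rather than typed by hand. This is only a sketch: the helper names <code>x264_element</code> and <code>matroska_element</code> are invented for this example, and the element descriptions simply reuse the properties from the pipeline above.

```shell
# Build the x264enc description for a given quantizer (0 = lossless) and speed preset.
x264_element() {
    quantizer="${1:-0}"
    preset="${2:-ultrafast}"
    echo "x264enc interlaced=true pass=quant option-string=quantizer=$quantizer speed-preset=$preset byte-stream=true"
}

# Build the matroskamux description with one cue entry every N seconds.
# min-index-interval is given in nanoseconds, hence the multiplication.
matroska_element() {
    seconds="${1:-1}"
    echo "matroskamux name=mux min-index-interval=$(( seconds * 1000000000 ))"
}

x264_element 18 veryslow
matroska_element 1
```

Calling <code>x264_element 18 veryslow</code> gives a smaller, slower encode, while the defaults reproduce the lossless settings used above.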

=== GStreamer 1.0: record from a bad analog signal to MJPEG video and RAW mono audio === |

|||

''stamp'' is not available in GStreamer 1.0, and ''cogcolorspace'' and ''ffmpegcolorspace'' have been replaced by ''videoconvert'':

|||

gst-launch-1.0 \ |

|||

v4l2src do-timestamp=true device=$VIDEO_DEVICE \

|||

! 'video/x-raw,format=(string)YV12,width=(int)720,height=(int)576' \ |

|||

! videorate \ |

|||

! 'video/x-raw,format=(string)YV12,framerate=25/1' \ |

|||

! videoconvert \ |

|||

! 'video/x-raw,format=(string)YV12,width=(int)720,height=(int)576' \ |

|||

! jpegenc \ |

|||

! queue \ |

|||

! mux. \ |

|||

alsasrc do-timestamp=true device=$AUDIO_DEVICE \ |

|||

! 'audio/x-raw,format=(string)S16LE,rate=(int)48000,channels=(int)2' \ |

|||

! audiorate \ |

|||

! audioresample \ |

|||

! 'audio/x-raw,rate=(int)44100' \ |

|||

! audioconvert \ |

|||

! 'audio/x-raw,channels=(int)1' \ |

|||

! queue \ |

|||

! mux. \ |

|||

avimux name=mux ! filesink location=test-$( date --iso-8601=seconds ).avi |

|||

As stated above, it is best to use both audiorate and videorate: you probably use the same chip to capture both the audio stream and the video stream, so the audio part is subject to disturbance as well.

|||
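When porting a 0.10 pipeline, the renames mentioned on this page can be applied mechanically as a first step. The filter below is a rough sketch, and <code>port_to_1_0</code> is a made-up name. It only covers the renames named on this page (the colorspace converters, the <code>ff</code> to <code>av</code> prefix and the raw caps names). Element properties and caps fields such as <code>format</code> or <code>depth</code> still need checking by hand with <code>gst-inspect-1.0</code>.

```shell
# Rewrite the element and caps names that changed between GStreamer 0.10 and 1.0.
# Reads a pipeline description on stdin and writes the converted text to stdout.
port_to_1_0() {
    sed -e 's|gst-launch-0\.10|gst-launch-1.0|g' \
        -e 's|ffmpegcolorspace|videoconvert|g' \
        -e 's|cogcolorspace|videoconvert|g' \
        -e 's|ffenc_|avenc_|g' \
        -e 's|ffmux_|avmux_|g' \
        -e 's|video/x-raw-yuv|video/x-raw|g' \
        -e 's|audio/x-raw-int|audio/x-raw|g'
}

echo 'gst-launch-0.10 v4l2src ! video/x-raw-yuv,width=640 ! ffmpegcolorspace ! ffenc_mpeg4 ! fakesink' | port_to_1_0
```

Treat the output as a starting point for testing, not as a finished pipeline.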

=== View pictures from a webcam === |

|||

Here are some miscellaneous examples for viewing webcam video: |

|||

gst-launch-0.10 \ |

|||

v4l2src do-timestamp=true use-fixed-fps=false \ |

|||

! video/x-raw-yuv,format=\(fourcc\)UYVY,width=320,height=240 \ |

|||

! ffmpegcolorspace \ |

|||

! autovideosink |

|||

gst-launch-0.10 \ |

|||

v4lsrc do-timestamp=true autoprobe-fps=false device=$VIDEO_DEVICE \ |

|||

! "video/x-raw-yuv,format=(fourcc)I420,width=160,height=120,framerate=10" \ |

|||

! autovideosink |

|||

=== Entrans: Record to DVD-compliant MPEG2 === |

|||

entrans -s cut-time -c 0-180 -v -x '.*caps' --dam -- --raw \ |

|||

v4l2src queue-size=16 do-timestamp=true device=$VIDEO_DEVICE norm=PAL-BG num-buffers=-1 \ |

|||

! stamp silent=false progress=0 sync-margin=2 sync-interval=5 \ |

|||

! queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! dam \ |

|||

! cogcolorspace \ |

|||

! videorate silent=false \ |

|||

! 'video/x-raw-yuv,width=720,height=576,framerate=25/1,interlaced=true,aspect-ratio=4/3' \ |

|||

! queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! ffenc_mpeg2video rc-buffer-size=1500000 rc-max-rate=7000000 rc-min-rate=3500000 bitrate=4000000 max-key-interval=15 pass=pass1 \ |

|||

! queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! mux. \ |

|||

pulsesrc buffer-time=2000000 do-timestamp=true \ |

|||

! queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! dam \ |

|||

! audioconvert \ |

|||

! audiorate silent=false \ |

|||

! audio/x-raw-int,rate=48000,channels=2,depth=16 \ |

|||

! queue silent=false max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! ffenc_mp2 bitrate=192000 \ |

|||

! queue silent=false leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 \ |

|||

! mux. \ |

|||

ffmux_mpeg name=mux \ |

|||

! filesink location=test-$( date --iso-8601=seconds ).mpg |

|||

This captures 3 minutes (180 seconds, see first line of the command) to ''test-$( date --iso-8601=seconds ).mpg'' and even works for bad input signals. |

|||

* I wasn't able to work out how to produce an MPEG file with AC3 sound, as neither ffmux_mpeg nor mpegpsmux supports AC3 streams at the moment. mplex does, but I couldn't get it working: it needs very big buffers to prevent the pipeline from stalling, and my GStreamer build didn't allow buffers that large.
* The limited buffer size on my system is also why I had to add a third queue element to the middle of both the audio and the video parts of the pipeline to prevent jerking.
* Many HOWTOs use ffmpegcolorspace instead of cogcolorspace. Either works, but cogcolorspace is much faster.
* It seems to be important that the ''video/x-raw-yuv,width=720,height=576,framerate=25/1,interlaced=true,aspect-ratio=4/3'' statement comes after ''videorate''. Otherwise videorate seems to drop the aspect-ratio metadata, resulting in files with an aspect ratio of 1 in their headers. Those files will probably be played back warped, and programs like dvdauthor complain.

|||
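The capture length in the command above is given in seconds through <code>-c 0-180</code>. Tape lengths are usually known in minutes, so a small helper can build that argument. <code>cut_range</code> is a hypothetical name used only in this sketch.

```shell
# Turn a tape length in minutes into the "start-end" range (in seconds)
# that the entrans command above passes to -c.
cut_range() {
    minutes="$1"
    echo "0-$(( minutes * 60 ))"
}

cut_range 3   # prints 0-180
```

The result can be substituted into the first line of the command, e.g. <code>-c "$( cut_range 240 )"</code> for a four-hour tape.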

== Ready-made scripts == |

|||

Although no two use cases are the same, it can be useful to see scripts used by other people. These can fill in blanks and provide inspiration for your own work. |

|||

=== Bash script to record video tapes with GStreamer (work-in-progress) === |

|||

Note: as of August 2015, this script is still being fine-tuned. Come back in a month or two to see the final version. |

|||

This example encapsulates a whole workflow - encoding with GStreamer, transcoding with ffmpeg and opportunities to edit the audio by hand. The default GStreamer command is similar to [[GStreamer#High_quality_video|this]], and by default ffmpeg converts it to MPEG4 video and MP3 audio in an AVI container. |

|||

The script has been designed so most people should only need to edit the config file, and even includes a more usable version of the commands from [[GStreamer#Getting_your_device_capabilities|getting your device capabilities]]. In general, you should first run the script with <code>--init</code> to create the config file, then edit that file by hand with help from <code>--caps</code> and <code>--profile</code>, then record with <code>--record</code> and transcode with a generated <code>remaster</code> script. |

|||

Search the script for <code>CMD</code> to find the interesting bits. Although the script is quite complex, most of it is just fluff to improve progress information etc. |
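The config file relies on the shell's <code>${VARIABLE:-default}</code> expansion: a value that is already set (in the environment or earlier in the config) is kept, and the default is used otherwise. The following sketch shows the pattern in isolation. The function name <code>apply_defaults</code> is an assumption for this example, and the defaults are copied from the script's configuration block.

```shell
# Keep any value that is already set, otherwise fall back to a default.
apply_defaults() {
    VIDEO_DEVICE="${VIDEO_DEVICE:-/dev/video0}"
    NORM="${NORM:-PAL}"
    SOURCE_WIDTH="${SOURCE_WIDTH:-720}"
    SOURCE_HEIGHT="${SOURCE_HEIGHT:-576}"
}

# Override one value, leave the rest to the defaults:
VIDEO_DEVICE=/dev/video1
apply_defaults
echo "$VIDEO_DEVICE $NORM ${SOURCE_WIDTH}x$SOURCE_HEIGHT"
```

This is why a setting can be changed for a single run by putting it in front of the command, without editing the config file.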

|||

<nowiki>#!/bin/bash |

|||

# |

|||

# Encode a video using either the 0.10 or 1.0 series of GStreamer

|||

# (each has bugs that break encoding on different cards) |

|||

# |

|||

# Also uses `v4l2-ctl` (from the v4l-utils package) to set the input source, |

|||

# and `ffmpeg` to remaster the file |

|||

# |

|||

# Approximate system requirements for maximum quality settings: |

|||

# * about 5-10GB disk space for every hour of the initial recording |

|||

# * about 4-8GB disk space for every hour of remastered recordings |

|||

# * 1.5GHz processor |

|||

HELP_MESSAGE="Usage: $0 --init |

|||

$0 --caps |

|||

$0 --profile |

|||

$0 --record <directory> |

|||

$0 --kill <directory> <timeout> |

|||

$0 --remaster <remaster-script> |

|||

Record a video into a directory (one directory per video). |

|||

--init create an initial ~/.v4l-record-scriptrc |

|||

please edit this file before your first recording |

|||

--caps show audio and video capabilities for your device |

|||

--profile update ~/.v4l-record-scriptrc with your system's noise profile |

|||

pause a tape or tune to a silent channel for the best profile |

|||

--record create a faithful recording in the specified directory |

|||

--kill stop the recording in <directory> after <timeout> |

|||

see \`man sleep\` for details about allowed time formats |

|||

--remaster create remastered recordings based on the initial recording |

|||

" |

|||

CONFIGURATION='# |

|||

# CONFIGURATION FOR GSTREAMER RECORD SCRIPT |

|||

# For more information, see http://www.linuxtv.org/wiki/index.php/GStreamer |

|||

# |

|||

# |

|||

# VARIABLES YOU NEED TO EDIT |

|||

# Every system and every use case is slightly different. |

|||

# Here are the things you will probably need to change: |

|||

# |

|||

# Set these based on your hardware/location: |

|||

VIDEO_DEVICE=${VIDEO_DEVICE:-/dev/video0} # `ls /dev/video*` for a list |

|||

AUDIO_DEVICE=${AUDIO_DEVICE:-hw:CARD=SAA7134,DEV=0} # `arecord -L` for a list |

|||

NORM=${NORM:-PAL} # (search Wikipedia for the exact norm in your country) |

|||

VIDEO_INPUT="${VIDEO_INPUT:-1}" # composite input - `v4l2-ctl --device=$VIDEO_DEVICE --list-inputs` for a list |

|||

# PAL video is approximately 720x576 resolution. VHS tapes have about half the horizontal quality, but this post convinced me to encode at 720x576 anyway: |

|||

# http://forum.videohelp.com/threads/215570-Sensible-resolution-for-VHS-captures?p=1244415#post1244415 |

|||

# Run `'"$0"' --caps` to find your supported width, height and bitrate: |

|||

SOURCE_WIDTH="${SOURCE_WIDTH:-720}" |

|||

SOURCE_HEIGHT="${SOURCE_HEIGHT:-576}" |

|||

AUDIO_BITRATE="${AUDIO_BITRATE:-32000}" |

|||

# For systems that do not automatically handle audio/video initialisation times: |

|||

AUDIO_DELAY="$AUDIO_DELAY" |

|||

# |

|||

# VARIABLES YOU MIGHT NEED TO EDIT |

|||

# These are defined in the script, but you can override them here if you need non-default values: |

|||

# |

|||

# set this to 1.0 to use the more recent version of GStreamer: |

|||

#GST_VERSION=0.10 |

|||

# Set these to alter the recording quality: |

|||

#GST_X264_OPTS="..." |

|||

#GST_FLAC_OPTS="..." |

|||

# Set these to control the audio/video pipelines: |

|||

#GST_QUEUE="..." |

|||

#GST_VIDEO_CAPS="..." |

|||

#GST_AUDIO_CAPS="..." |

|||

#GST_VIDEO_SRC="..." |

|||

#GST_AUDIO_SRC="..." |

|||

# ffmpeg has better remastering tools: |

|||

#FFMPEG_DENOISE_OPTS="..." # edit depending on your tape quality |

|||

#FFMPEG_VIDEO_OPTS="..." |

|||

#FFMPEG_AUDIO_OPTS="..." |

|||

# Reducing noise: |

|||

#GLOBAL_NOISE_AMOUNT=0.21 |

|||

# |

|||

# VARIABLES SET AUTOMATICALLY |

|||

# |

|||

# Once you have set the above, record a silent source (e.g. a paused tape or silent TV channel) |

|||

# then call '"$0"' --profile to build the global noise profile |

|||

' |

|||

# |

|||

# CONFIGURATION SECTION |

|||

# |

|||

CONFIG_SCRIPT="$HOME/.v4l-record-scriptrc" |

|||

[ -e "$CONFIG_SCRIPT" ] && source "$CONFIG_SCRIPT" |

|||

source <( echo "$CONFIGURATION" ) |

|||

GST_VERSION="${GST_VERSION:-0.10}" # or 1.0 |

|||

# `gst-inspect` has more information here too: |

|||

GST_X264_OPTS="${GST_X264_OPTS:-interlaced=true pass=quant option-string=qpmin=0:qpmax=0 speed-preset=ultrafast tune=zerolatency byte-stream=true}"
GST_FLAC_OPTS="${GST_FLAC_OPTS:-}"

|||

GST_MKV_OPTS="min-index-interval=2000000000" # also known as "cue data", this makes seeking faster |

|||

# this doesn't really matter, and isn't required in 1.0: |

|||

case "$GST_VERSION" in |

|||

0.10) |

|||

GST_VIDEO_FORMAT="-yuv" |

|||

GST_AUDIO_FORMAT="-int" |

|||

;; |

|||

1.0) |

|||

GST_VIDEO_FORMAT="" |

|||

GST_AUDIO_FORMAT="" |

|||

;; |

|||

*) |

|||

echo "Please specify 'GST_VERSION' of '0.10' or '1.0', not '$GST_VERSION'" |

|||

exit 1 |

|||

;; |

|||

esac |

|||

# `gst-inspect-0.10 <element> | less -i` for a list of properties (e.g. `gst-inspect-0.10 v4l2src | less -i`): |

|||

GST_QUEUE="${GST_QUEUE:-queue max-size-buffers=0 max-size-time=0 max-size-bytes=0}" |

|||

GST_VIDEO_CAPS="${GST_VIDEO_CAPS:-video/x-raw$GST_VIDEO_FORMAT,interlaced=true,width=$SOURCE_WIDTH,height=$SOURCE_HEIGHT}" |

|||

GST_AUDIO_CAPS="${GST_AUDIO_CAPS:-audio/x-raw$GST_AUDIO_FORMAT,depth=16,rate=$AUDIO_BITRATE}" |

|||

GST_VIDEO_SRC="${GST_VIDEO_SRC:-v4l2src device=$VIDEO_DEVICE do-timestamp=true norm=$NORM ! $GST_QUEUE ! $GST_VIDEO_CAPS}" |

|||

GST_AUDIO_SRC="${GST_AUDIO_SRC:-alsasrc device=$AUDIO_DEVICE do-timestamp=true ! $GST_QUEUE ! $GST_AUDIO_CAPS}" |

|||

# `ffmpeg -h full` for more information: |

|||

FFMPEG_DENOISE_OPTS="${FFMPEG_DENOISE_OPTS:-hqdn3d=luma_spatial=6:2:luma_tmp=20}" # based on an old VHS tape, with recordings in LP mode

|||

FFMPEG_VIDEO_OPTS="${FFMPEG_VIDEO_OPTS:--flags +ilme+ildct -c:v mpeg4 -q:v 3 -vf il=d,$FFMPEG_DENOISE_OPTS,il=i,crop=(iw-10):(ih-14):3:0,pad=iw:ih:(ow-iw)/2:(oh-ih)/2}" |

|||

FFMPEG_AUDIO_OPTS="${FFMPEG_AUDIO_OPTS:--c:a libmp3lame -b:a 256k}" # note: for some reason, ffmpeg desyncs audio and video if "-q:a" is used instead of "-b:a" |

|||

# |

|||

# UTILITY FUNCTIONS |

|||

# You should only need to edit these if you're making significant changes to the way the script works |

|||

# |

|||

pluralise() { |

|||

case "$1" in |

|||

""|0) return |

|||

;; |

|||

1) echo "$1 $2, " |

|||

;; |

|||

*) echo "$1 ${2}s, " |

|||

;; |

|||

esac |

|||

} |

|||

gst_progress() { |

|||

START_TIME="$( date +%s )" |

|||

MESSAGE= |

|||

PROGRESS_NEWLINE= |

|||

while read HEAD TAIL |

|||

do |

|||

if [ "$HEAD" = "progressreport0" ] |

|||

then |

|||

NOW_TIME="$( date +%s )" |

|||

echo -n $'\r'"$( echo -n "$MESSAGE" | tr -c '' ' ' )"$'\r' |

|||

MESSAGE="$( echo "$TAIL" | { |

|||

read TIME PROCESSED SLASH TOTAL REPLY |

|||

progress_message "" "$START_TIME" "$TOTAL" "$PROCESSED" |

|||

echo "$MESSAGE" |

|||

})" |

|||

PROGRESS_NEWLINE=$'\n' |

|||

else |

|||

echo "$PROGRESS_NEWLINE$HEAD $TAIL" >&2 |

|||

echo "$MESSAGE" >&2 |

|||

PROGRESS_NEWLINE= |

|||

fi |

|||

done |

|||

echo -n $'\r'"$( echo -n "$MESSAGE" | tr -c '' ' ' )"$'\r' >&2 |

|||

} |

|||

ffmpeg_progress() { |

|||

MESSAGE="$1..." |

|||

echo -n $'\r'"$MESSAGE" >&2 |

|||

while IFS== read PARAMETER VALUE |

|||

do |

|||

if [ "$PARAMETER" = out_time_ms ] |

|||

then |

|||

echo -n $'\r'"$( echo -n "$MESSAGE" | tr -c '' ' ' )"$'\r' >&2 |

|||

if [ -z "$TOTAL_TIME_MS" -o "$TOTAL_TIME_MS" = 0 ] |

|||

then |

|||

case $SPINNER in |

|||

\-|'') SPINNER=\\ ;; |

|||

\\ ) SPINNER=\| ;; |

|||

\| ) SPINNER=\/ ;; |

|||

\/ ) SPINNER=\- ;; |

|||

esac |

|||

MESSAGE="$1 $SPINNER" |

|||

else |

|||

if [ -n "$VALUE" -a "$VALUE" != 0 ] |

|||

then |

|||

TIME_REMAINING=$(( ( $(date +%s) - $START_TIME ) * ( $TOTAL_TIME_MS - $VALUE ) / $VALUE )) |

|||

HOURS_REMAINING=$(( $TIME_REMAINING / 3600 )) |

|||

MINUTES_REMAINING=$(( ( $TIME_REMAINING - $HOURS_REMAINING*3600 ) / 60 )) |

|||

SECONDS_REMAINING=$(( $TIME_REMAINING - $HOURS_REMAINING*3600 - $MINUTES_REMAINING*60 )) |

|||

HOURS_REMAINING="$( pluralise $HOURS_REMAINING hour )" |

|||

MINUTES_REMAINING="$( pluralise $MINUTES_REMAINING minute )" |

|||

SECONDS_REMAINING="$( pluralise $SECONDS_REMAINING second )" |

|||

MESSAGE_REMAINING="$( echo "$HOURS_REMAINING$MINUTES_REMAINING$SECONDS_REMAINING" | sed -e 's/, $//' -e 's/\(.*\),/\1 and/' )" |

|||

MESSAGE="$1 $(( 100 * VALUE / TOTAL_TIME_MS ))% ETA: $( date +%X -d "$TIME_REMAINING seconds" ) (about $MESSAGE_REMAINING)" |

|||

fi |

|||

fi |

|||

echo -n $'\r'"$MESSAGE" >&2 |

|||

elif [ "$PARAMETER" = progress -a "$VALUE" = end ] |

|||

then |

|||

echo -n $'\r'"$( echo -n "$MESSAGE" | tr -c '' ' ' )"$'\r' >&2 |

|||

return |

|||

fi |

|||

done |

|||

} |

|||

# convert 00:00:00.000 to a count in milliseconds |

|||

parse_time() { |

|||

echo "$(( $(date -d "1970-01-01T${1}Z" +%s )*1000 + $( echo "$1" | sed -e 's/.*\.\([0-9]\)$/\100/' -e 's/.*\.\([0-9][0-9]\)$/\10/' -e 's/.*\.\([0-9][0-9][0-9]\)$/\1/' -e '/^[0-9][0-9][0-9]$/! s/.*/0/' ) ))" |

|||

} |

|||

# get the full name of the script's directory |

|||

set_directory() { |

|||

if [ -z "$1" ] |

|||

then |

|||

echo "$HELP_MESSAGE" |

|||

exit 1 |

|||

else |

|||

DIRECTORY="$( readlink -f "$1" )" |

|||

FILE="$DIRECTORY/$( basename "$DIRECTORY" )" |

|||

fi |

|||

} |

|||

# actual commands that do something interesting: |

|||

CMD_GST="gst-launch-$GST_VERSION" |

|||

CMD_FFMPEG="ffmpeg -loglevel 23 -nostdin" |

|||

CMD_SOX="nice -n +20 sox" |

|||

# |

|||

# MAIN LOOP |

|||

# |

|||

case "$1" in |

|||

-i|--i|--in|--ini|--init) |

|||

if [ -e "$CONFIG_SCRIPT" ] |

|||

then |

|||

echo "Please delete $CONFIG_SCRIPT if you want to recreate it" |

|||

else |

|||

echo "$CONFIGURATION" > "$CONFIG_SCRIPT" |

|||

echo "Please edit $CONFIG_SCRIPT to match your system" |

|||

fi |

|||

;; |

|||

-p|--p|--pr|--pro|--prof|--profi|--profil|--profile) |

|||

sed -i "$CONFIG_SCRIPT" -e '/^GLOBAL_NOISE_PROFILE=.*/d' |

|||

echo "GLOBAL_NOISE_PROFILE='$( "$CMD_GST" -q alsasrc device="$AUDIO_DEVICE" ! wavenc ! fdsink | sox -t wav - -n trim 0 1 noiseprof | tr '\n' '\t' )'" >> "$CONFIG_SCRIPT"

|||

echo "Updated $CONFIG_SCRIPT with global noise profile" |

|||

;; |

|||

-c|--c|--ca|--cap|--caps) |

|||

{ |

|||

echo 'Audio capabilities:' >&2 |

|||

"$CMD_GST" --gst-debug=alsa:5 alsasrc device=$AUDIO_DEVICE ! fakesink 2> >( sed -ne '/returning caps\|src caps/ { s/.*\( returning caps \| src caps \)/\t/ ; s/; /\n\t/g ; p }' | sort >&2 ) | head -1 >/dev/null |

|||

sleep 0.1 |

|||

echo 'Video capabilities:' >&2 |

|||

"$CMD_GST" --gst-debug=v4l2:5,v4l2src:3 v4l2src device=$VIDEO_DEVICE ! fakesink 2> >( sed -ne '/probed caps:\|src caps/ { s/.*\(probed caps:\|src caps\) /\t/ ; s/; /\n\t/g ; p }' | sort >&2 ) | head -1 >/dev/null |

|||

} 2>&1 |

|||

;; |

|||

-r|--rec|--reco|--recor|--record) |

|||

# Build a pipeline with sources being encoded as lossless H.264 (x264) video and FLAC audio, then being muxed into a Matroska container.

|||

# FLAC and Matroska are used during encoding to ensure we don't lose much data between passes |

|||

set_directory "$2" |

|||

mkdir -p -- "$DIRECTORY" || exit |

|||

if [ -e "$FILE.pid" ]

|||

then |

|||

echo "Already recording a video in this directory" |

|||

exit |

|||

fi |

|||

if [ -e "$FILE.mkv" ] |

|||

then |

|||

echo "Please delete the old $FILE.mkv before making a new recording" |

|||

exit 1 |

|||

fi |

|||

[ -n "$VIDEO_INPUT" ] && v4l2-ctl --device="$VIDEO_DEVICE" --set-input $VIDEO_INPUT > >( grep -v '^Video input set to' ) |

|||

date +"%c: started recording $FILE.mkv" |

|||

# trap keyboard interrupt (control-c) |

|||

trap kill_gstreamer 0 SIGHUP SIGINT SIGQUIT SIGABRT SIGKILL SIGALRM SIGSEGV SIGTERM |

|||

kill_gstreamer() { [ -e "/proc/$(< "$FILE.pid" )" ] && kill -s 2 "$(< "$FILE.pid" )" ; } |

|||

sh -c "echo \$\$ > '$FILE.pid' && \ |

|||

exec $CMD_GST -q -e \ |

|||

$GST_VIDEO_SRC ! x264enc $GST_X264_OPTS ! progressreport update-freq=1 ! mux. \ |

|||

$GST_AUDIO_SRC ! flacenc $GST_FLAC_OPTS ! mux. \ |

|||

matroskamux name=mux $GST_MKV_OPTS ! filesink location='$FILE.mkv'" \ |

|||

2> >( grep -v 'Source ID [0-9]* was not found when attempting to remove it' ) \ |

|||

| \ |

|||

while read FROM TIME REMAINDER |

|||

do [ "$FROM" = progressreport0 ] && echo -n $'\r'"$( date +"%c: recorded ${TIME:1:8} - press ctrl+c to finish" )" >&2 |

|||

done |

|||

trap '' 0 SIGHUP SIGINT SIGQUIT SIGABRT SIGKILL SIGALRM SIGSEGV SIGTERM |

|||

echo >&2 |

|||

date +"%c: finished recording $FILE.mkv" |

|||

rm -f "$FILE.pid" |

|||

cat <<EOF > "$FILE-remaster.sh" |

|||

#!$0 --remaster |

|||

# |

|||

# The original $( basename $FILE ).mkv accurately represents the source. |

|||

# If you would like to get rid of imperfections in the source (e.g. |

|||

# splitting it into segments), edit then run this file. |

|||

# |

|||

# *** REMASTERING OPTIONS *** |

|||

# |

|||

# AUDIO DELAY |

|||

# |

|||

# To add a period of silence at the beginning of the video, watch the .mkv |

|||

# file and decide how much silence you want. |

|||

# |

|||

# If you want to add a delay, set this variable to the duration in seconds |

|||

# (can be fractional): |

|||

# |

|||

audio_delay ${AUDIO_DELAY:-0.0} |

|||

# |

|||

# ORIGINAL FILE |

|||

# |

|||

# This is the original file to be remastered: |

|||

original "$( basename $FILE ).mkv" |

|||

# |

|||

# SEGMENTS |

|||

# |

|||

# You can split a video into one or more files. To create a remastered |

|||

# segment, add a line like this: |

|||

# |

|||

# segment "name of output file.avi" "start time" "end time" |

|||

# |

|||

# "start time"/"end time" is optional, and specifies the part of the file |

|||

# that will be used for the segment |

|||

# |

|||

# Here are some examples - remove the leading '#' to make one work: |

|||

# remaster the whole file in one go: |

|||

# segment "$( basename $FILE ).avi" |

|||

# split into two parts just over an hour:

|||

# segment "$( basename $FILE ) part 1.avi" "00:00:00" "01:00:05" |

|||

# segment "$( basename $FILE ) part 2.avi" "00:59:55" "01:00:05" |

|||

EOF |

|||

chmod 755 "$FILE-remaster.sh" |

|||

cat <<EOF |

|||

To remaster this recording, see $FILE-remaster.sh |

|||

EOF |

|||

;; |

|||

-k|--k|--ki|--kil|--kill) |

|||

set_directory "$2" |

|||

if [ -e "$FILE.pid" ] |

|||

then |

|||

if [ -n "$3" ] |

|||

then |

|||

date +"Will \`kill -INT $(< "$FILE.pid" )\` at %X..." -d "+$( echo "$3" | sed -e 's/h/ hour/' -e 's/m/ minute/' -e 's/^\([0-9]*\)s\?$/\1 second/' )" \ |

|||

&& sleep "$3" \ |

|||

|| exit 0 |

|||

fi |

|||

kill -s 2 "$(< "$FILE.pid" )" \ |

|||

&& date +"Ran \`kill -INT $(< "$FILE.pid" )\` at %X"

|||

else |

|||

echo "Cannot kill - not recording in $DIRECTORY" |

|||

fi |

|||

;; |

|||

-m|--rem|--rema|--remas|--remast|--remaste|--remaster) |

|||

# we use ffmpeg and sox here, as they have better remastering tools and GStreamer doesn't offer any particular advantages |

|||

HAVE_REMASTERED= |

|||

# so people that don't understand shell scripts don't have to learn about variables: |

|||

audio_delay() { |

|||

if [[ "$1" =~ ^[0.]*$ ]] |

|||

then AUDIO_DELAY= |

|||

else AUDIO_DELAY="$1" |

|||

fi |

|||

} |

|||

original() { ORIGINAL="$1" ; } |

|||

# build a segment: |

|||

segment() { |

|||

SEGMENT_FILENAME="$1" |

|||

SEGMENT_START="$2" |

|||

SEGMENT_END="$3" |

|||

if [ -e "$SEGMENT_FILENAME" ] |

|||

then |

|||

read -p "Are you sure you want to delete the old $SEGMENT_FILENAME (y/N)? " |

|||

if [ "$REPLY" = "y" ] |

|||

then rm -f "$SEGMENT_FILENAME" |

|||

else return |

|||

fi |

|||

fi |

|||

# Calculate segment: |

|||

if [ -z "$SEGMENT_START" ] |

|||

then |

|||

SEGMENT_START_OPTS= |

|||

SEGMENT_END_OPTS= |

|||

else |

|||

SEGMENT_START_OPTS="-ss $SEGMENT_START" |

|||

SEGMENT_END_OPTS="$(( $( parse_time "$SEGMENT_END" ) - $( parse_time "$SEGMENT_START" ) ))"; |

|||

TOTAL_TIME_MS="${SEGMENT_END_OPTS}000" # initial estimate, will calculate more accurately later |

|||

SEGMENT_END_OPTS="-t $( echo "$SEGMENT_END_OPTS" | sed -e s/\\\([0-9][0-9][0-9]\\\)$/.\\\1/ )000" |

|||

fi |

|||

AUDIO_FILE="${SEGMENT_FILENAME/\.*/.wav}" |

|||

CURRENT_STAGE=1 |

|||

if [ -e "$AUDIO_FILE" ] |

|||

then STAGE_COUNT=2 |

|||

else STAGE_COUNT=3 |

|||

fi |

|||

[ -e "$AUDIO_FILE" ] || echo "Edit audio file $AUDIO_FILE and rerun to include hand-crafted audio" |

|||

START_TIME="$( date +%s )" |

|||

while IFS== read PARAMETER VALUE |

|||

do |

|||

if [ "$PARAMETER" = frame ] |

|||

then FRAME=$VALUE |

|||

else |

|||

[ "$PARAMETER" = out_time_ms ] && OUT_TIME_MS="$VALUE" |

|||

echo $PARAMETER=$VALUE |

|||

fi |

|||

TOTAL_TIME_MS=$OUT_TIME_MS |

|||

FRAMERATE="${FRAME}000000/$OUT_TIME_MS" |

|||

done < <( $CMD_FFMPEG $SEGMENT_START_OPTS -i "$ORIGINAL" $SEGMENT_END_OPTS -vcodec copy -an -f null /dev/null -progress /dev/stdout < /dev/null ) \ |

|||

> >( ffmpeg_progress "$SEGMENT_FILENAME: $CURRENT_STAGE/$STAGE_COUNT calculating framerate" ) |

|||

CURRENT_STAGE=$(( CURRENT_STAGE + 1 )) |

|||

# Build audio file for segment: |

|||

MESSAGE= |

|||

if ! [ -e "$AUDIO_FILE" ] |

|||

then |

|||

START_TIME="$( date +%s )" |

|||

# Step one: extract audio |

|||

$CMD_FFMPEG -y -progress >( ffmpeg_progress "$SEGMENT_FILENAME: extracting audio" ) $SEGMENT_START_OPTS -i "$ORIGINAL" $SEGMENT_END_OPTS -vn -f wav >( |

|||

case "${AUDIO_DELAY:0:1}X" in # Step two: shift the audio according to the audio delay |

|||

X) |

|||

# no audio delay |

|||

cat |

|||

;; |

|||

-) |

|||

# negative audio delay - trim start |

|||

$CMD_SOX -V1 -t wav - -t wav - trim "${AUDIO_DELAY:1}"

|||

;; |

|||

*) |

|||

# positive audio delay - prepend silence |

|||

$CMD_SOX -t wav <( $CMD_SOX -n -r "$AUDIO_BITRATE" -c 2 -t wav - trim 0.0 "$AUDIO_DELAY" ) -t wav - -t wav -

|||

;; |

|||

esac | \ |

|||

\ |

|||

if [ -z "$GLOBAL_NOISE_PROFILE" ] # Step three: denoise based on the global noise profile, then normalise audio levels |

|||

then $CMD_SOX -t wav - "$AUDIO_FILE" norm -1 |

|||

else $CMD_SOX -t wav - "$AUDIO_FILE" noisered <( echo "$GLOBAL_NOISE_PROFILE" | tr '\t' '\n' ) "${GLOBAL_NOISE_AMOUNT:-0.21}" norm -1 |

|||

fi 2> >( grep -vF 'sox WARN wav: Premature EOF on .wav input file' ) |

|||

) < /dev/null |

|||

CURRENT_STAGE=$(( CURRENT_STAGE + 1 )) |

|||

fi |

|||

echo -n $'\r'"$( echo -n "$MESSAGE" | tr -c '' ' ' )"$'\r' >&2 |

|||

# Build video file for segment: |

|||

START_TIME="$( date +%s )" |

|||

$CMD_FFMPEG \ |

|||

-progress file://>( ffmpeg_progress "$SEGMENT_FILENAME: $CURRENT_STAGE/$STAGE_COUNT creating video" ) \ |

|||

$SEGMENT_START_OPTS -i "$ORIGINAL" \ |

|||

-i "$AUDIO_FILE" \ |

|||

-map 1:0 -map 0:1 \ |

|||

-r "$FRAMERATE" \ |

|||

$SEGMENT_END_OPTS \ |

|||

$FFMPEG_VIDEO_OPTS $FFMPEG_AUDIO_OPTS \ |

|||

"$SEGMENT_FILENAME" \ |

|||

< /dev/null |

|||

sleep 0.1 # quick-and-dirty way to ensure ffmpeg_progress finishes before we print the next line |

|||

echo "$SEGMENT_FILENAME saved" |

|||

HAVE_REMASTERED=true |

|||

} |

|||

SCRIPT_FILE="$( readlink -f "$2" )" |

|||

cd "$( dirname "$SCRIPT_FILE" )" |

|||

source "$SCRIPT_FILE" |

|||

if [ -z "$HAVE_REMASTERED" ] |

|||

then echo "Please specify at least one segment" |

|||

fi |

|||

;; |

|||

*) |

|||

echo "$HELP_MESSAGE" |

|||

esac</nowiki> |

|||

This script generates a video in two passes: the first pass records the video and builds statistics; once you have analysed that output, the second pass builds an optimised final version. |

|||

=== Bash script to record video tapes with entrans === |

|||

<nowiki>#!/bin/bash |

|||

targetdirectory="$HOME/videos" |

|||

# Check whether the script is already running |

|||

if [[ -e "$HOME/.lock_shutdown.digitalisieren" ]]; then |

|||

echo "" |

|||

echo "" |

|||

echo "Capturing is already running. It is impossible to capture two tapes simultaneously. Hit a key to abort." |

|||

read -n 1 |

|||

exit |

|||

fi |

|||

# trap keyboard interrupt (control-c) |

|||

trap control_c 0 SIGHUP SIGINT SIGQUIT SIGABRT SIGALRM SIGSEGV SIGTERM |

|||

control_c() |

|||

# run if user hits control-c |

|||

{ |

|||

cleanup |

|||

exit $? |

|||

} |

|||

cleanup() |

|||

{ |

|||

rm -f "$HOME/.lock_shutdown.digitalisieren" |

|||

return $? |

|||

} |

|||

touch "$HOME/.lock_shutdown.digitalisieren" |

|||

echo "" |

|||

echo "" |

|||

echo "Please enter the length of the tape in minutes and press ENTER. (Press Ctrl+C to abort.)" |

|||

echo "" |

|||

while read -e laenge; do |

|||

if [[ $laenge =~ ^[0-9]+$ ]]; then |

|||

break |

|||

else |

|||

echo "" |

|||

echo "" |

|||

echo "That's not a number." |

|||

echo "Please enter the length of the tape in minutes and press ENTER. (Press Ctrl+C to abort.)" |

|||

echo "" |

|||

fi |

|||

done |

|||

let laenge=laenge+10 # safety margin in case the tape is longer than stated |

|||

let laenge=laenge*60 |

|||

echo "" |

|||

echo "" |

|||

echo "Please type in the description of the tape." |

|||

echo "Don't forget to rewind the tape!" |

|||

echo "Hit ENTER to start capturing. Press Ctrl+C to abort." |

|||

echo "" |

|||

read -e name; |

|||

name=${name//\//_} |

|||

name=${name//\"/_} |

|||

name=${name//:/_} |

|||

# If the name is already taken |

|||

if [[ -e "$targetdirectory/$name.mpg" ]]; then |

|||

nummer=0 |

|||

while [[ -e "$targetdirectory/$name.$nummer.mpg" ]]; do |

|||

let nummer=nummer+1 |

|||

done |

|||

name=$name.$nummer |

|||

fi |

|||

# Audio settings: unmute and set levels |

|||

amixer -D pulse cset name='Capture Switch' 1 >& /dev/null # switch on the capture channel |

|||

amixer -D pulse cset name='Capture Volume' 20724 >& /dev/null # set the capture level |

|||

# Select the video input and configure the card |

|||

v4l2-ctl --set-input 3 >& /dev/null |

|||

v4l2-ctl -c saturation=80 >& /dev/null |

|||

v4l2-ctl -c brightness=130 >& /dev/null |

|||

let ende=$(date +%s)+laenge |

|||

echo "" |

|||

echo "Working" |

|||

echo "Capturing will be finished at $(date -d "@$ende" +%H.%M)." |

|||

echo "" |

|||

echo "Press Ctrl+C to finish capturing now." |

|||

nice -n -10 entrans -s cut-time -c 0-$laenge -m --dam -- --raw \ |

|||

v4l2src queue-size=16 do-timestamp=true device=${VIDEO_DEVICE:-/dev/video0} norm=PAL-BG num-buffers=-1 ! stamp sync-margin=2 sync-interval=5 silent=false progress=0 ! \ |

|||

queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! dam ! \ |

|||

cogcolorspace ! videorate ! \ |

|||

'video/x-raw-yuv,width=720,height=576,framerate=25/1,interlaced=true,aspect-ratio=4/3' ! \ |

|||

queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! \ |

|||

ffenc_mpeg2video rc-buffer-size=1500000 rc-max-rate=7000000 rc-min-rate=3500000 bitrate=4000000 max-key-interval=15 pass=pass1 ! \ |

|||

queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! mux. \ |

|||

pulsesrc buffer-time=2000000 do-timestamp=true ! \ |

|||

queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! dam ! \ |

|||

audioconvert ! audiorate ! \ |

|||

audio/x-raw-int,rate=48000,channels=2,depth=16 ! \ |

|||

queue max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! \ |

|||

ffenc_mp2 bitrate=192000 ! \ |

|||

queue leaky=2 max-size-buffers=0 max-size-time=0 max-size-bytes=0 ! mux. \ |

|||

ffmux_mpeg name=mux ! filesink location=\"$targetdirectory/$name.mpg\" >& /dev/null |

|||

echo "Finished Capturing" |

|||

rm ~/.lock_shutdown.digitalisieren</nowiki> |

|||

The script uses a command line similar to the one in [[GStreamer#Record_to_DVD-compliant_MPEG2|Record to DVD-compliant MPEG2]] to produce a DVD-compliant MPEG-2 file. |

|||

* The script aborts if another instance is already running. |

|||

* Otherwise, it asks for the length of the tape and a description. |

|||

* It records for the given time plus 10 minutes to ''description.mpg'' or, if that file already exists, to ''description.0.mpg'', ''description.1.mpg'' and so on. The target directory has to be specified at the beginning of the script. |

|||

* Because GStreamer can only partly select inputs and configure the capture device, the script uses other tools (amixer and v4l2-ctl) for this. |

|||

* Adjust the settings to match your input source, recording volume, capture saturation and so on. |
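
The file naming and the recording time described above can be tried out separately from the capture itself. The following is only a minimal sketch: the function names <code>unique_name</code> and <code>capture_seconds</code> are hypothetical helpers that do not appear in the script, and they merely mirror its logic for choosing a free file name and adding the 10-minute safety margin.

```shell
#!/bin/bash

# Hypothetical helper (not part of the script above): print the first
# unused base name for a recording in directory $1 with description $2.
# Mirrors the script: description.mpg, then description.0.mpg, and so on.
unique_name() {
    local dir="$1" name="$2" n=0
    if [[ -e "$dir/$name.mpg" ]]; then
        while [[ -e "$dir/$name.$n.mpg" ]]; do
            n=$(( n + 1 ))
        done
        name="$name.$n"
    fi
    printf '%s\n' "$name"
}

# Hypothetical helper: convert a tape length in minutes ($1) into the
# capture duration in seconds, including the 10-minute safety margin.
capture_seconds() {
    echo $(( ($1 + 10) * 60 ))
}
```

For a 180-minute tape, <code>capture_seconds 180</code> prints 11400, which is the value the script would pass to entrans as the end of the cut time.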

|||

==Further documentation resources== |


||

* [[V4L_capturing|V4L Capturing]] |

|||

* [http://gstreamer.freedesktop.org/ GStreamer project]


||

* [http://gstreamer.freedesktop.org/data/doc/gstreamer/head/faq/html/ FAQ] |


||

Revision as of 01:23, 4 September 2015